Color transformations involve processing the components of a color image within a single color model, rather than converting between different models. In these transformations, each pixel of the color image is represented as a triplet (for RGB or HSI) or quartet (for CMYK) of values corresponding to the chosen color space.

Formulation of Color Transformations

Similar to intensity transformations, a general model for color transformations can be represented by:

$$g(x, y) = T[f(x, y)]$$

Where:

- $f(x, y)$ is the input color image,

- $g(x, y)$ is the processed or transformed output image,

- $T$ is the transformation operator applied over the image’s spatial neighborhood of $(x, y)$.

In color images, the pixel values are groups of three (RGB, HSI) or four (CMYK) values. The transformation process often involves applying a separate function to each color component:

$$s_i = T_i(r_1, r_2, \ldots, r_n), \quad i = 1, 2, \ldots, n$$

Here, $r_i$ represent the color components of the input image at point $(x, y)$, and $s_i$ are the transformed color components. If the chosen color space is RGB, $n = 3$ and the three components are red, green, and blue. For CMYK, $n = 4$ and the components are cyan, magenta, yellow, and black.

Example: Color Transformations in CMYK, RGB, and HSI

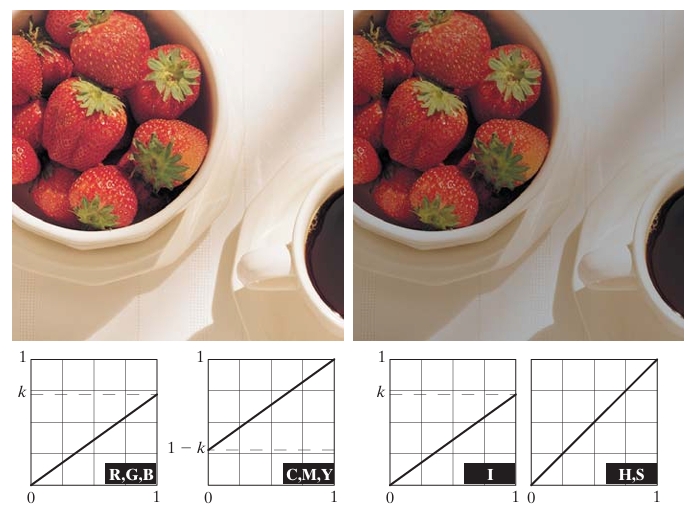

Consider a high-resolution color image of strawberries and coffee, digitized from a large-format color negative. The image is first represented in the CMYK color space, where each component (cyan, magenta, yellow, and black) contributes to the overall image. For example, the strawberries in the image show large amounts of magenta and yellow because these components are the brightest. When the image is converted to the RGB space, the strawberries appear predominantly red with minimal green and blue components.

In the HSI (Hue, Saturation, Intensity) space, the intensity component represents a monochrome version of the full-color image, and the strawberries show high saturation due to their pure red color. However, interpreting the hue component can be challenging due to discontinuities where 0° and 360° meet, and the hue is undefined for white, black, and gray colors.

Practical Example: Intensity Modification

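Let’s say we want to modify the intensity of the image using the transformation:

$$g(x, y) = k\,f(x, y), \quad 0 < k < 1$$

This can be done differently depending on the color space. Writing $r_i$ for the input color components and $s_i$ for the output components, in the HSI color space only the intensity component is affected:

$$s_1 = r_1, \quad s_2 = r_2, \quad s_3 = k\,r_3$$

In contrast, the RGB and CMY spaces require transformations of all components. For RGB:

$$s_i = k\,r_i, \quad i = 1, 2, 3$$

For CMY (the three-component form plotted in the figure):

$$s_i = k\,r_i + (1 - k), \quad i = 1, 2, 3$$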

Although the HSI transformation involves fewer operations, converting the image to HSI from RGB or CMYK is computationally intense. In this case, it may be more efficient to apply the transformation directly in the RGB or CMYK spaces.
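As a rough sketch of the intensity scaling discussed above, the following Python functions apply it in each space. It assumes components normalized to [0, 1], a CMY representation obtained as one minus RGB, and the CMY mapping $s_i = k\,r_i + (1 - k)$, which is the counterpart of scaling RGB by $k$. The function names and the sample pixel are hypothetical.

```python
def scale_rgb(rgb, k):
    """RGB: every channel is multiplied by k."""
    return tuple(k * r for r in rgb)


def scale_cmy(cmy, k):
    """CMY: every channel becomes k * c + (1 - k)."""
    return tuple(k * c + (1.0 - k) for c in cmy)


def scale_hsi(hsi, k):
    """HSI: only the intensity (third) component changes."""
    h, s, i = hsi
    return (h, s, k * i)


def rgb_to_cmy(rgb):
    """Assumed CMY model: C = 1 - R, M = 1 - G, Y = 1 - B."""
    return tuple(1.0 - r for r in rgb)


k = 0.7
pixel_rgb = (0.8, 0.2, 0.1)  # hypothetical pixel, normalized to [0, 1]

darker_rgb = scale_rgb(pixel_rgb, k)
darker_cmy = scale_cmy(rgb_to_cmy(pixel_rgb), k)

# Both routes describe the same darkened color.
print(rgb_to_cmy(darker_rgb))
print(darker_cmy)
```

The two printed triplets agree up to floating-point rounding, which illustrates why the CMY mapping needs the extra $(1 - k)$ offset while the RGB mapping does not.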

Figure 6.31: (a) Original image. (b) Result of decreasing its intensity by 30% (i.e., letting $k = 0.7$). (c)–(e) The required RGB, CMY, and HSI transformation functions. (Original image courtesy of MedData Interactive.)

Example Output

When we apply an intensity transformation with $k = 0.7$, the result is a darker version of the original image, as shown in Fig. 6.31(b). The transformation functions are depicted graphically in Figs. 6.31(c)-(e), showing how each color component is modified independently.

Color Complements

Color complements refer to hues that are positioned directly opposite each other on a color circle. These complements hold a significant role in image processing, as they are analogous to grayscale negatives, which are helpful for enhancing details in dark areas of a color image. Particularly, color complements are effective when these dark regions dominate the image, allowing important features to be brought out.

Mathematical Concept of Color Complements

In the RGB color model, the complement of a color is determined by subtracting each color component from its maximum value. For an 8-bit system, where RGB values range from 0 to 255, the complement of any RGB color can be computed by:

$$(R', G', B') = (255 - R,\ 255 - G,\ 255 - B)$$

Where:

- $R$, $G$, and $B$ are the red, green, and blue components of the original color,

- $(R', G', B')$ is the complement of the color.

This is essentially the same as the transformation used for grayscale negatives, but applied to each color component independently.

Example:

If you have an RGB color represented by, say, $(100, 150, 200)$ (an illustrative value), the complement would be:

$$(255 - 100,\ 255 - 150,\ 255 - 200) = (155, 105, 55)$$

In this example, the original color with a bluish tone becomes a more reddish tone after applying the complement transformation.

Visualizing Complements on a Color Circle

On a color circle, complementary colors are those positioned directly opposite each other. For instance:

- Red is opposite Cyan,

- Green is opposite Magenta,

- Blue is opposite Yellow.

When a color is complemented, its hue shifts to the opposite side of the circle, which changes its appearance significantly.

Color Complement in Image Processing

Consider the color image shown in Fig. 6.31 (a bowl of strawberries and a cup of coffee). When its complement is computed, the red strawberries turn cyan, and the black coffee becomes white. The result is reminiscent of a photographic film negative. This kind of transformation is particularly useful for enhancing fine details that may otherwise be difficult to discern in dark regions of the image, such as shadows.

Mathematical Example of RGB Transformation for Complements

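In this example, let’s take a pixel from the image that has an RGB value of, say, $(200, 30, 40)$ (an illustrative strawberry red). To find the complement of this pixel, we apply the complement formula:

$$(255 - 200,\ 255 - 30,\ 255 - 40) = (55, 225, 215)$$

Thus, the complement of $(200, 30, 40)$ is $(55, 225, 215)$, a cyan-like color.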

Hue-Saturation-Intensity (HSI) and Complements

Unlike the simple RGB complement transformation, computing a complement in the HSI color model is not as straightforward. In RGB, the transformation of each component is independent of the others, but in HSI, components like hue and saturation are interdependent.

For example, the hue complement in HSI is obtained by shifting the hue value by 180° on the color wheel. However, this transformation may not be as simple as in the RGB model because the saturation and intensity of the image play important roles in the final output.

If we take an image with very low saturation (like grayscale or nearly grayscale), its complement may not be perceptible because the hue is poorly defined when the saturation is close to zero. In such cases, a direct HSI transformation for complements can result in unexpected or inaccurate outcomes.

Because of this interdependence, the saturation component of the complement cannot be computed solely from the input saturation; it also depends on the other components of the input color.
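The point about saturation can be checked numerically. The sketch below assumes the usual HSI saturation formula $S = 1 - 3\min(R, G, B)/(R + G + B)$ with RGB normalized to [0, 1], and takes the complement in RGB as one minus each component. The two sample colors are hypothetical, chosen to have the same saturation but different intensities.

```python
def saturation(rgb):
    """HSI saturation of a normalized RGB triplet."""
    total = sum(rgb)
    if total == 0:
        return 0.0  # black: saturation is undefined, 0 is used here by convention
    return 1.0 - 3.0 * min(rgb) / total


def complement(rgb):
    """RGB complement for components in [0, 1]."""
    return tuple(1.0 - c for c in rgb)


a = (0.5, 0.25, 0.25)  # hypothetical dull red
b = (1.0, 0.5, 0.5)    # hypothetical brighter red with the same saturation

print(saturation(a), saturation(b))                          # equal input saturations
print(saturation(complement(a)), saturation(complement(b)))  # different complement saturations
```

Both inputs have the same saturation, yet their complements do not, so no function of the input saturation alone can produce the saturation of the complement.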

Practical Example: Color Complement Application

Let’s apply the complement transformation to an image. Suppose we have an image where a red object dominates (for instance, strawberries in the earlier example). The RGB value of a red pixel might be something like $(255, 0, 0)$.

The complement of this red pixel would be:

$$(255 - 255,\ 255 - 0,\ 255 - 0) = (0, 255, 255)$$

So, the complement of a pure red pixel would result in $(0, 255, 255)$, a cyan color. This transformation is often used to reveal details in the image that are difficult to see in the original due to the dominance of one color.
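A minimal sketch of the complement applied to a whole image follows, assuming an 8-bit image stored as nested lists of (R, G, B) tuples. The tiny 2×2 image and the function names are hypothetical.

```python
def complement_pixel(rgb, max_value=255):
    """Subtract each component from the maximum value."""
    return tuple(max_value - c for c in rgb)


def complement_image(image, max_value=255):
    """Apply the complement to every pixel of a row-major image."""
    return [[complement_pixel(px, max_value) for px in row] for row in image]


# Hypothetical image: pure red, black, white, and a dark blue-gray pixel.
image = [
    [(255, 0, 0), (0, 0, 0)],
    [(255, 255, 255), (30, 60, 90)],
]
negative = complement_image(image)
print(negative)
```

Applying the function twice returns the original image, which is the same behavior as a grayscale negative.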

Color Slicing

Color slicing is a technique used in image processing that isolates and highlights a specific range of colors in an image. This method is particularly useful when the goal is to emphasize a particular object or region in a color image based on its color values. Color slicing can help highlight certain features in an image while reducing or completely suppressing the background or other irrelevant parts.

Mathematical Concept of Color Slicing

In color slicing, the goal is to retain a set of pixels whose colors fall within a specified range (called the “slice”) and suppress (darken or desaturate) all other colors. Mathematically, this is done by selecting a range of colors around a target color in a given color space (for instance, the RGB color space), and transforming the pixels that fall within this range.

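The color slicing operation is represented as follows:

$$g(x, y) = \begin{cases} f(x, y) & \text{if } D\big(f(x, y), \mathbf{c}_0\big) < D_0 \\ 0 & \text{otherwise} \end{cases}$$

Here suppressed pixels are set to 0 (black); a darkened or neutral value can be substituted.

Where:

- $f(x, y)$ is the color value at pixel $(x, y)$ in the input image.

- $g(x, y)$ is the resulting pixel value at $(x, y)$ after color slicing.

- $\mathbf{c}_0$ is the target color (the color we want to highlight).

- $D_0$ is a threshold that defines the tolerance for the color range around $\mathbf{c}_0$.

- $D\big(f(x, y), \mathbf{c}_0\big)$ is the distance between the pixel’s color and the target color in the chosen color space.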

Example: Color Slicing in RGB Space

In the RGB color space, each pixel is represented by a triplet $(R, G, B)$, where $R$, $G$, and $B$ are the red, green, and blue components, respectively. Suppose we want to highlight only red regions in an image. We select a target red color $\mathbf{c}_0 = (255, 0, 0)$, which represents a pure red color.

We can then define a threshold $D_0$ to decide how much variation around this red color we will tolerate. For instance, if $D_0 = 50$, any pixel whose RGB values are within a distance of 50 from $\mathbf{c}_0$ will be kept, while others will be darkened or set to zero.

The distance between a pixel color $(R, G, B)$ and the target color $(R_0, G_0, B_0)$ in RGB space can be calculated using the Euclidean distance:

$$D = \sqrt{(R - R_0)^2 + (G - G_0)^2 + (B - B_0)^2}$$

If this distance is less than $D_0$, the pixel’s color is retained; otherwise, it is suppressed.

Example:

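Consider an image where a red apple needs to be highlighted, and the background should be suppressed. Let the target red color be $(255, 0, 0)$, and let the threshold be 50.

If a pixel in the image has the color, say, $(240, 10, 10)$ (an illustrative value), we compute the distance to the target red color as:

$$D = \sqrt{(240 - 255)^2 + (10 - 0)^2 + (10 - 0)^2} = \sqrt{425} \approx 20.6$$

Since this distance is less than 50, the pixel is retained.

If a pixel has a color like $(100, 150, 200)$ (again illustrative), the distance calculation would yield:

$$D = \sqrt{(100 - 255)^2 + (150 - 0)^2 + (200 - 0)^2} = \sqrt{86525} \approx 294.2$$

Since the distance is much larger than 50, this pixel would be suppressed or set to black.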
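The RGB slicing rule can be sketched in a few lines of Python. This is only an illustration: the image is a nested list of 8-bit (R, G, B) tuples, suppressed pixels are set to black, and the pixel values and function names are hypothetical.

```python
import math


def rgb_distance(c1, c2):
    """Euclidean distance between two RGB triplets."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(c1, c2)))


def slice_colors(image, target, threshold, background=(0, 0, 0)):
    """Keep pixels closer than `threshold` to `target`; replace the rest."""
    return [
        [px if rgb_distance(px, target) < threshold else background for px in row]
        for row in image
    ]


target_red = (255, 0, 0)
image = [[(240, 10, 10), (100, 150, 200)]]  # hypothetical reddish and bluish pixels

sliced = slice_colors(image, target_red, threshold=50)
print(sliced)
```

Replacing `background` with a gray or a darkened copy of the pixel gives the softer "suppress" variants mentioned in the text.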

Color Slicing in Different Color Spaces

Color slicing can be performed in different color spaces, including:

- HSI (Hue, Saturation, Intensity): In the HSI model, the target color is defined by its hue, and color slicing can be done based on hue ranges. This is often more intuitive than RGB for applications where color perception matters (e.g., human visual system).

- Lab color space: The Lab color space separates the lightness component (L) from the color components (a and b), making it ideal for color slicing based on perceptual color differences.

In the HSI color space, color slicing is typically performed based on the hue value. If you want to highlight a certain hue (e.g., red), you would specify a range of hues around the target hue. The advantage of using HSI is that it allows for easier manipulation of colors while keeping brightness and saturation separate.

Example in HSI Space:

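If the target hue for a red object is $H_0 = 0^\circ$ (red), you can define a range of hue values between $H_0 - \Delta H$ and $H_0 + \Delta H$. For example, with an illustrative $\Delta H = 20^\circ$, any hue between $340^\circ$ and $20^\circ$ (wrapping through $0^\circ$) around red would be retained, and other hues would be suppressed.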
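Because hue is an angle, a hue range centered on red has to wrap around the 0°/360° boundary. The following sketch shows one way to test membership in such a range, assuming hue in degrees; the half-width of 20° and the function names are hypothetical.

```python
def hue_distance(h1, h2):
    """Smallest angular difference between two hues, in degrees."""
    d = abs(h1 - h2) % 360.0
    return min(d, 360.0 - d)


def in_hue_slice(hue, target_hue, half_width):
    """True if `hue` lies within `half_width` degrees of `target_hue`."""
    return hue_distance(hue, target_hue) <= half_width


# Hypothetical slice: red (0 degrees) with a 20 degree half-width.
for hue in (350.0, 15.0, 40.0, 200.0):
    print(hue, in_hue_slice(hue, 0.0, 20.0))
```

In practice a saturation check is usually added as well, since hue is poorly defined for nearly gray pixels.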

Applications of Color Slicing

Color slicing has various practical applications:

- Medical Imaging: In medical images, certain color ranges (like those associated with tissue types or stains) can be isolated and emphasized to help with diagnosis.

- Object Detection: Color slicing can be used to isolate and detect specific objects in an image, such as detecting fruits (e.g., apples) in a scene based on their red color.

- Remote Sensing: In satellite imagery, certain natural features (like vegetation) have distinct color signatures, and color slicing can help in isolating these features for analysis.

- Digital Art: Artists use color slicing to create visually striking images by highlighting particular colors in a photograph or design while suppressing others.

Tone and Color Corrections

Tone and color corrections are essential processes in modern digital imaging workflows, allowing photographers, graphic designers, and image editors to fine-tune their images for optimal visual appeal and accuracy. These adjustments can be made using standard desktop computers in conjunction with digital cameras, flatbed scanners, and inkjet printers, transforming a personal computer into a digital darkroom. This process eliminates the need for traditional darkroom processing, as tonal adjustments and color corrections can be handled digitally, particularly in photo enhancement and color reproduction.

Importance of Monitors in Color Corrections

To ensure effective tone and color corrections, the transformations must be developed, refined, and evaluated on monitors. It is crucial to maintain a high degree of color consistency between monitors and output devices (such as printers) to ensure that the final output is accurate to the digital representation.

Color profiles are essential in this process, helping to map each device’s color gamut to a device-independent color model. For many color management systems (CMS), the model of choice is the CIE $L^*a^*b^*$ model, also known as CIELAB. Its gamut encompasses the entire visible spectrum, so it can relate colors between monitors, printers, and other devices. It is colorimetric, meaning that colors perceived as matching are encoded identically, and it is also approximately perceptually uniform.

Mathematical Concepts Behind the CIE $L^*a^*b^*$ Model

The $L^*a^*b^*$ model represents colors in three dimensions:

- L*: Lightness component.

- a*: Represents the red-green axis.

- b*: Represents the blue-yellow axis.

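The components are defined mathematically as:

$$L^* = 116\,h\!\left(\frac{Y}{Y_W}\right) - 16$$

$$a^* = 500\left[h\!\left(\frac{X}{X_W}\right) - h\!\left(\frac{Y}{Y_W}\right)\right]$$

$$b^* = 200\left[h\!\left(\frac{Y}{Y_W}\right) - h\!\left(\frac{Z}{Z_W}\right)\right]$$

Where:

- $X$, $Y$, and $Z$ are the tristimulus values of the color in the image.

- $X_W$, $Y_W$, and $Z_W$ are the tristimulus values of a reference white point (typically white from a perfectly diffusing surface under standardized light, like D65 illumination).

- The function $h(q)$ is defined as:

$$h(q) = \begin{cases} \sqrt[3]{q} & q > 0.008856 \\ 7.787\,q + 16/116 & q \le 0.008856 \end{cases}$$

The D65 reference white, which represents daylight, is defined by the chromaticity coordinates $x = 0.3127$ and $y = 0.3290$ in the CIE chromaticity diagram.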
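A minimal sketch of the XYZ to CIELAB conversion is given below. It assumes the standard CIE 1976 definitions, $L^* = 116\,h(Y/Y_W) - 16$, $a^* = 500[h(X/X_W) - h(Y/Y_W)]$, $b^* = 200[h(Y/Y_W) - h(Z/Z_W)]$, with $h(q) = q^{1/3}$ for $q > 0.008856$ and $7.787q + 16/116$ otherwise. The D65 white point values (with $Y$ normalized to 1) are commonly quoted figures and are included here as an assumption, as are the function names.

```python
# Assumed D65 reference white, tristimulus values with Y normalized to 1.
D65_WHITE = (0.95047, 1.0, 1.08883)


def h(q):
    """Cube root above the threshold, linear segment below it."""
    if q > 0.008856:
        return q ** (1.0 / 3.0)
    return 7.787 * q + 16.0 / 116.0


def xyz_to_lab(xyz, white=D65_WHITE):
    """Convert XYZ tristimulus values to (L*, a*, b*)."""
    x, y, z = xyz
    xw, yw, zw = white
    hx, hy, hz = h(x / xw), h(y / yw), h(z / zw)
    lightness = 116.0 * hy - 16.0
    a_star = 500.0 * (hx - hy)
    b_star = 200.0 * (hy - hz)
    return (lightness, a_star, b_star)


print(xyz_to_lab(D65_WHITE))        # the reference white itself
print(xyz_to_lab((0.0, 0.0, 0.0)))  # black
```

The reference white maps to a lightness of 100 with neutral $a^*$ and $b^*$, and black maps to a lightness of approximately 0, which is a quick sanity check on any implementation.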

Perceptual Uniformity

One of the main benefits of the $L^*a^*b^*$ system is perceptual uniformity: equal numerical differences between colors are perceived by the human eye as roughly equal visual differences. This helps color corrections performed in this model give consistent visual results across various devices, from monitors to printers. The model is also device-independent, meaning the same values describe the same color regardless of the display or output device; each device’s profile handles the mapping to its own gamut.

Example: Color Correction Using $L^*a^*b^*$

Consider an image with significant tonal imbalance, where certain parts are overexposed (too bright) and other areas are underexposed (too dark). By adjusting the $L^*$ component (lightness), you can either brighten or darken specific areas to balance the intensity. Additionally, you can adjust the $a^*$ and $b^*$ components to correct color shifts (such as from red to green or from blue to yellow).

Suppose a print of an image appears too warm (too red-yellow). By adjusting the $a^*$ and $b^*$ values towards the negative side (green and blue), the color balance can be corrected, making the image appear more neutral.

Tonal Range and Tonal Adjustments

Another crucial aspect of tone correction is adjusting the tonal range of an image. The tonal range refers to the distribution of intensities in an image, from the darkest blacks to the brightest whites. Images with an uneven tonal range (such as high-key images, which have most information concentrated in high intensities, or low-key images, concentrated in low intensities) may require balancing to ensure that details are visible across the entire intensity spectrum.

This process often involves histogram equalization, where the histogram of pixel intensities is adjusted to stretch across the full range of possible values, thereby improving the contrast and revealing details in both the highlights and shadows.

Histogram Processing

Histogram processing is a fundamental technique in image processing, used primarily to enhance the contrast of images. The histogram of an image represents the distribution of pixel intensities, showing how often each intensity value occurs in the image. By analyzing and manipulating this histogram, we can improve the visual quality of an image, making details more visible and improving contrast.

1. What is a Histogram?

For a grayscale image, the histogram is a graph with:

- The x-axis representing the intensity levels (ranging from 0 to 255 for an 8-bit image).

- The y-axis representing the frequency of each intensity level (i.e., how many pixels in the image have that intensity value).

The intensity values range from 0 (black) to 255 (white) for an 8-bit grayscale image. The histogram provides a visual representation of how pixel intensities are distributed, which is essential for analyzing an image’s contrast and brightness.

2. Types of Histogram Manipulation

The two most common types of histogram-based processing are Histogram Equalization and Histogram Matching (Specification).

2.1 Histogram Equalization

Histogram equalization is a method to enhance the contrast of an image. It spreads out the most frequent intensity values in an image, making the image appear clearer and more balanced in terms of brightness.

Mathematical Concept

Given an image with $L$ possible intensity levels, the goal is to map each pixel’s intensity value $r_k$ to a new value $s_k$ based on its cumulative distribution function (CDF) in such a way that the resulting image has a roughly uniform histogram.

Let $r_k$ be the $k$-th intensity level in the input image, and let $p_r(r_k)$ represent the probability of occurrence of intensity level $r_k$. The histogram equalization transformation function is defined as:

$$s_k = T(r_k) = (L - 1) \sum_{j=0}^{k} p_r(r_j), \quad k = 0, 1, \ldots, L - 1$$

Where:

- $L$ is the total number of intensity levels (for an 8-bit image, $L = 256$).

- $p_r(r_j)$ is the normalized histogram of the image, i.e., the probability of each intensity value occurring in the image.

- $s_k$ is the new intensity value corresponding to $r_k$ after equalization.

Steps:

- Compute the histogram of the image.

- Normalize the histogram to create a probability distribution.

- Compute the cumulative distribution function (CDF).

- Use the CDF to map each intensity level $r_k$ to a new intensity value $s_k$.

Example

Suppose we have an image with the following pixel intensities: [52, 55, 61, 59, 79, 61, 64, 64, 73, 85]. The histogram for these pixel values shows that intensities are concentrated in certain areas, so we apply histogram equalization.

- Step 1: Compute the histogram, showing how often each intensity appears.

- Step 2: Normalize the histogram by dividing by the total number of pixels.

- Step 3: Compute the CDF by summing the normalized histogram values.

- Step 4: Map the pixel values to new values based on the CDF to spread the intensity values evenly.

This process results in a more uniform distribution of intensities and improved contrast in the image.
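The four steps can be sketched in plain Python on the ten pixel values from this example. The sketch assumes the textbook mapping $s_k = (L - 1) \cdot \mathrm{CDF}(r_k)$ with $L = 256$, rounded to the nearest integer; it uses integer arithmetic for the rounding, and the function name is hypothetical.

```python
def equalize(pixels, levels=256):
    """Histogram-equalize a flat list of integer intensities."""
    n = len(pixels)

    # Step 1: histogram of intensity counts.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Steps 2-3: running sum of counts; dividing by n gives the CDF.
    # Step 4: s_k = (levels - 1) * CDF(r_k), rounded half up with integers.
    mapping = [0] * levels
    cumulative = 0
    for r in range(levels):
        cumulative += hist[r]
        mapping[r] = ((levels - 1) * cumulative + n // 2) // n

    return [mapping[p] for p in pixels]


pixels = [52, 55, 61, 59, 79, 61, 64, 64, 73, 85]
print(equalize(pixels))
# [26, 51, 128, 77, 230, 128, 179, 179, 204, 255]
```

The input values span only 52 to 85, while the output spans 26 to 255, which is the contrast stretch the text describes. Some libraries use a slightly different variant that subtracts the minimum CDF value so the darkest input maps to 0.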

2.2 Histogram Matching (Specification)

While histogram equalization enhances contrast, it might not always produce the desired effect. Sometimes, we want the output image to have a specific histogram shape (e.g., to match the style of another image). This technique is called histogram matching or specification.

Mathematical Concept

Let $r_k$ be the intensity levels in the original image, and $z_q$ be the intensity levels in the target image. The goal of histogram matching is to map the intensity values of the original image to the intensity values of the target image so that the histograms of the two images match.

The basic process involves:

- Compute the histogram and CDF of the original image.

- Compute the histogram and CDF of the target image.

- For each intensity level in the original image, find the corresponding intensity level in the target image using their CDFs.

Steps:

- Compute the histograms and CDFs of both the input and target images.

- For each intensity value in the input image, find the closest corresponding intensity in the target image using the inverse of the CDF.

- Replace each pixel intensity in the input image with the matched intensity from the target image.
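One possible reading of these steps is sketched below for flat lists of integer intensities. For each input level it picks the smallest target level whose CDF reaches the input CDF, which is one common way to invert the target CDF; other tie-breaking rules exist. The function names and the sample data are hypothetical.

```python
def cdf(pixels, levels):
    """Cumulative distribution of intensities as a list of length `levels`."""
    n = len(pixels)
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    out, running = [], 0
    for count in hist:
        running += count
        out.append(running / n)
    return out


def match_histogram(source, target, levels=256):
    """Map source intensities so their distribution follows the target's."""
    src_cdf = cdf(source, levels)
    tgt_cdf = cdf(target, levels)

    mapping = []
    z = 0
    for r in range(levels):
        # Smallest z whose target CDF reaches the source CDF at level r.
        # Both CDFs are non-decreasing, so z never needs to move backwards.
        while z < levels - 1 and tgt_cdf[z] < src_cdf[r]:
            z += 1
        mapping.append(z)

    return [mapping[p] for p in source]


dark = [10, 10, 20, 30]            # hypothetical dark input
bright = [100, 150, 200, 250]      # hypothetical well-exposed target
print(match_histogram(dark, bright))
# [150, 150, 200, 250]
```

The dark input is pulled up into the intensity range of the target while the ordering of its pixels is preserved.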

Example

Suppose we have two images:

- The input image has a histogram that is concentrated in the lower intensity range (dark image).

- The target image has a histogram with a broader distribution of intensities (well-exposed image).

By applying histogram matching, we can adjust the input image’s histogram to match the target image’s histogram. This improves the brightness and contrast of the input image, giving it the appearance of the target image.

3. Applications of Histogram Processing

- Contrast Enhancement: Histogram equalization is widely used to improve contrast in low-contrast images, making them visually clearer and more detailed.

- Style Transfer: Histogram matching can be used to give one image the appearance of another, by matching their histograms.

- Preprocessing for Computer Vision: Many computer vision algorithms benefit from equalized input, because normalizing contrast reduces their sensitivity to differences in lighting and exposure between images.

4. Example of Histogram Equalization

Let’s take an image that has poor contrast, where most pixel values are clustered around a narrow range of intensity values. The steps for histogram equalization can be applied as follows:

Original Histogram: A typical low-contrast image might have its pixel intensities clustered between 50 and 150 (on a scale of 0-255). The histogram will show this as a spike in the midrange.

Histogram Equalization: By redistributing these pixel intensities using the cumulative distribution function, we spread the pixel values more evenly across the range from 0 to 255. The histogram after equalization will be flatter, and the image will have improved contrast.

5. Limitations of Histogram Processing

- Over-enhancement: In some cases, histogram equalization can over-enhance the contrast, causing noise or other unwanted artifacts to become more visible.

- Global Approach: Standard histogram processing techniques are global, meaning they treat all pixels in the image equally. This may not always be desirable, especially if different parts of the image require different adjustments. Local histogram processing techniques can address this limitation.

Additional details about Color Transformations

Color transformations are a critical aspect of digital image processing, enabling manipulation of color characteristics in an image. These transformations involve changing the color values of an image’s pixels in a systematic way to achieve effects like color correction, intensity adjustments, and enhancing specific features. In this article, we will discuss the basic principles, mathematical formulations, and examples of key color transformations, particularly focusing on color spaces such as RGB, HSI, and CIE $L^*a^*b^*$.

1. Color Spaces and Representations

Before diving into color transformations, it is important to understand that different color spaces represent color in various ways. A color space defines how color is represented as a set of values or coordinates. The three most commonly used color spaces are:

- RGB (Red, Green, Blue): A device-dependent color model where each color is represented by combining different intensities of red, green, and blue.

- HSI (Hue, Saturation, Intensity): A more intuitive color model that separates the chromatic content (hue and saturation) from the luminance (intensity).

- CIE $L^*a^*b^*$: A perceptually uniform color model where $L^*$ represents lightness, $a^*$ represents the red-green axis, and $b^*$ represents the blue-yellow axis.

2. Mathematical Formulation of Color Transformations

2.1 Linear Transformations in the RGB Color Space

In the RGB color space, each pixel in the image has three color components: red ($R$), green ($G$), and blue ($B$). Color transformations can be linear or non-linear. One of the simplest forms of transformation is intensity scaling, where the intensity of each color channel is scaled by a constant factor.

For a linear transformation, the transformed pixel value can be represented as:

$$\begin{bmatrix} R' \\ G' \\ B' \end{bmatrix} = \mathbf{M} \begin{bmatrix} R \\ G \\ B \end{bmatrix} + \mathbf{b}$$

Where:

- $\mathbf{M}$ is a $3 \times 3$ transformation matrix (e.g., for scaling or rotation).

- $\mathbf{b}$ is an optional bias vector (often used for translation or shifting).

- $R'$, $G'$, and $B'$ are the transformed color components.

Example: Intensity Adjustment

If we want to reduce the intensity of an image by 30%, we can scale the RGB components using a constant factor $k = 0.7$:

$$R' = 0.7\,R, \quad G' = 0.7\,G, \quad B' = 0.7\,B$$

This transformation uniformly reduces the intensity of the image, making it darker while preserving the color relationships.
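A small sketch of the linear form, a 3×3 matrix applied to the pixel plus an optional bias, is shown here for a single pixel. Intensity scaling is the special case where the matrix is $k$ times the identity. The function names and the sample pixel are hypothetical.

```python
def linear_color_transform(pixel, matrix, bias=(0.0, 0.0, 0.0)):
    """Return matrix @ pixel + bias for one (R, G, B) pixel."""
    return tuple(
        sum(m * c for m, c in zip(row, pixel)) + b
        for row, b in zip(matrix, bias)
    )


def scaling_matrix(k):
    """Diagonal matrix that scales every channel by k."""
    return [
        [k, 0.0, 0.0],
        [0.0, k, 0.0],
        [0.0, 0.0, k],
    ]


pixel = (200, 100, 50)  # hypothetical 8-bit pixel
print(linear_color_transform(pixel, scaling_matrix(0.7)))
```

With $k = 0.7$ the pixel comes out at approximately (140, 70, 35): the ratios between channels are unchanged, which is why the color relationships are preserved. For real images the result would normally be rounded and clipped to the valid range.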

2.2 Color Transformations in the HSI Space

The HSI color model separates chromatic information (hue and saturation) from intensity, which is useful for applications like color-based segmentation, where we want to isolate colors without affecting intensity.

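The conversion from RGB to HSI, with $R$, $G$, and $B$ normalized to $[0, 1]$, is given by the following transformations:

- Hue (H): Represents the type of color, and is calculated as:

$$H = \begin{cases} \theta & \text{if } B \le G \\ 360^\circ - \theta & \text{if } B > G \end{cases} \qquad \text{with} \qquad \theta = \cos^{-1}\left\{ \frac{\tfrac{1}{2}\left[(R - G) + (R - B)\right]}{\left[(R - G)^2 + (R - B)(G - B)\right]^{1/2}} \right\}$$

- Saturation (S): Indicates the purity of the color, and is given by:

$$S = 1 - \frac{3}{R + G + B}\,\min(R, G, B)$$

- Intensity (I): Represents the brightness, calculated as:

$$I = \frac{1}{3}(R + G + B)$$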
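The hue, saturation, and intensity definitions can be sketched as a single function. This assumes the standard geometric HSI formulas with RGB normalized to [0, 1] and hue in degrees; the handling of black and gray pixels (where hue is undefined) is a convention chosen for this illustration, and the function name is hypothetical.

```python
import math


def rgb_to_hsi(r, g, b):
    """Convert normalized RGB to (hue in degrees, saturation, intensity)."""
    i = (r + g + b) / 3.0
    if i == 0.0:
        return (0.0, 0.0, 0.0)  # black: hue and saturation undefined, use 0

    s = 1.0 - min(r, g, b) / i  # same as 1 - 3*min/(r+g+b)

    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0.0:
        h = 0.0  # gray: hue undefined, use 0
    else:
        ratio = 0.5 * ((r - g) + (r - b)) / den
        ratio = max(-1.0, min(1.0, ratio))  # guard against rounding error
        theta = math.degrees(math.acos(ratio))
        h = theta if b <= g else 360.0 - theta

    return (h, s, i)


print(rgb_to_hsi(1.0, 0.0, 0.0))  # pure red: hue 0
print(rgb_to_hsi(0.0, 1.0, 0.0))  # pure green: hue near 120
print(rgb_to_hsi(0.0, 0.0, 1.0))  # pure blue: hue near 240
```

The three primaries land at roughly 0°, 120°, and 240° with full saturation, matching their positions on the color circle.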

Example: Changing Intensity in HSI

To reduce the brightness of an image, we can modify the intensity component while leaving the hue and saturation unchanged:

$$H' = H, \quad S' = S, \quad I' = k\,I$$

This would darken the image while maintaining the color tone and saturation. For instance, setting $k = 0.8$ reduces the intensity by 20%.

2.3 Color Transformations in the CIE $L^*a^*b^*$ Space

The CIE $L^*a^*b^*$ (or CIELAB) color space is approximately perceptually uniform, meaning that equal changes in color values are perceived as roughly equal changes by the human eye. This is ideal for tasks like color balancing and color correction.

- Lightness (L*): Represents the luminance or brightness of the color.

- a*: Represents the position between green and red.

- b*: Represents the position between blue and yellow.

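The conversion between RGB and CIE $L^*a^*b^*$ involves an intermediate conversion to XYZ color space, which models human color perception. The formula for converting from XYZ to $L^*a^*b^*$ is as follows:

$$L^* = 116\,h\!\left(\frac{Y}{Y_W}\right) - 16$$

$$a^* = 500\left[h\!\left(\frac{X}{X_W}\right) - h\!\left(\frac{Y}{Y_W}\right)\right]$$

$$b^* = 200\left[h\!\left(\frac{Y}{Y_W}\right) - h\!\left(\frac{Z}{Z_W}\right)\right]$$

Where $X_W$, $Y_W$, and $Z_W$ are the reference white tristimulus values, and the function $h(q)$ is defined as:

$$h(q) = \begin{cases} \sqrt[3]{q} & q > 0.008856 \\ 7.787\,q + 16/116 & q \le 0.008856 \end{cases}$$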

Example: Color Balancing Using $L^*a^*b^*$

To balance the color of an image, we can modify the $a^*$ and $b^*$ components without altering the lightness. For instance, increasing $a^*$ shifts the color towards red, while decreasing it shifts it towards green. Similarly, adjusting $b^*$ shifts the color along the blue-yellow axis.

By tuning these values, we can correct color imbalances, such as removing a yellowish tint from an image by decreasing $b^*$.

3. Applications of Color Transformations

- Image Enhancement: Adjusting color intensity and saturation to improve visual appeal, making an image more vibrant.

- Color Correction: Used in photography and video post-processing to correct color casts, such as white balancing or fixing underexposed regions.

- Medical Imaging: Color transformations are used to highlight certain features in medical images, such as tumors or abnormalities.

- Remote Sensing: Used to enhance satellite images by applying color transforms to distinguish land from water, or vegetation from urban areas.

4. Practical Example: Adjusting Intensity in the RGB and HSI Spaces

Consider an image where the overall brightness needs adjustment, but we want to preserve color details. We can:

- Convert the image from RGB to HSI.

- Adjust only the intensity component.

- Convert the image back from HSI to RGB.

The advantage of this method is that the intensity is changed without affecting the hue and saturation, thus preserving the color integrity of the image while adjusting brightness.
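The three-step procedure can be sketched end to end for a single pixel. The code assumes the standard geometric HSI formulas and their sector-based inverse, with RGB normalized to [0, 1]; the function names and the sample pixel are hypothetical, and no clipping is applied because the example only darkens.

```python
import math


def rgb_to_hsi(r, g, b):
    """Normalized RGB to (hue in degrees, saturation, intensity)."""
    i = (r + g + b) / 3.0
    if i == 0.0:
        return (0.0, 0.0, 0.0)
    s = 1.0 - min(r, g, b) / i
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0.0:
        return (0.0, s, i)  # gray: hue undefined, use 0
    ratio = max(-1.0, min(1.0, 0.5 * ((r - g) + (r - b)) / den))
    theta = math.degrees(math.acos(ratio))
    return (theta if b <= g else 360.0 - theta, s, i)


def hsi_to_rgb(h, s, i):
    """Inverse conversion, handled per 120-degree sector."""
    h = h % 360.0

    def boosted(angle_deg):
        a = math.radians(angle_deg)
        return i * (1.0 + s * math.cos(a) / math.cos(math.radians(60.0) - a))

    low = i * (1.0 - s)
    if h < 120.0:    # RG sector
        r, b = boosted(h), low
        g = 3.0 * i - (r + b)
    elif h < 240.0:  # GB sector
        g, r = boosted(h - 120.0), low
        b = 3.0 * i - (r + g)
    else:            # BR sector
        b, g = boosted(h - 240.0), low
        r = 3.0 * i - (g + b)
    return (r, g, b)


def scale_intensity(rgb, k):
    """Convert to HSI, scale only I, convert back."""
    h, s, i = rgb_to_hsi(*rgb)
    return hsi_to_rgb(h, s, k * i)


print(scale_intensity((0.8, 0.2, 0.1), 0.7))
```

For this pixel the result is approximately (0.56, 0.14, 0.07), the same as multiplying each RGB channel by 0.7. That agreement reflects the fact that hue and saturation, as defined here, do not change when all three channels are scaled by the same factor.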

References:

- Gonzalez, R. C., & Woods, R. E. (2008). Digital Image Processing (3rd ed.). Prentice Hall.

- Sharma, G. (Ed.). (2002). Digital Color Imaging Handbook. CRC Press.

- Poynton, C. A. (2012). Digital Video and HD: Algorithms and Interfaces. Elsevier.

- Robertson, A. R. (1977). The CIE 1976 Color-Difference Formulae. Color Research & Application, 2(1), 7-11.