Image segmentation is a fundamental task in image processing and computer vision, where the goal is to partition an image into multiple segments or regions. Each segment typically corresponds to different objects or parts of the scene. Color-based segmentation is one of the most popular approaches to segment an image, especially when color information is a key distinguishing feature between regions.

In this article, we’ll delve into how color-based image segmentation works, explain the mathematical concepts involved, and provide an example.

1. Color Spaces

To perform color-based segmentation, it’s essential to understand color spaces. A color space is a way to represent colors in a digital image using numerical values. The most common color spaces used for segmentation are:

RGB (Red, Green, Blue): A color is represented by three channels, each corresponding to the intensity of red, green, and blue components, with values typically ranging from 0 to 255.

- Mathematically, a pixel is represented as: where , , and represent the intensities of red, green, and blue, respectively.

HSV (Hue, Saturation, Value): A more intuitive color space for humans, especially when it comes to segmenting objects based on color.

Hue (H) represents the type of color (e.g., red, blue).

Saturation (S) represents the purity or intensity of the color.

Value (V) represents the brightness of the color.

Mathematically, a pixel in the HSV color space can be represented as:

where , , and represent hue, saturation, and value, respectively.

2. Thresholding for Segmentation

Thresholding is a simple yet effective method for color-based segmentation. The idea is to define thresholds for the color values to separate regions of interest from the background.

For example, in an RGB image, suppose we want to segment all red objects. We can set thresholds for the R, G, and B channels, such as:

Pixels that meet these conditions are considered part of the red object, while others are not.

In the HSV color space, we can apply a threshold on the hue to segment a specific color:

This method is effective for simple images with clearly defined colors but can struggle when colors overlap or when the lighting conditions vary significantly.

3. Clustering for Segmentation: K-Means Algorithm

Another method for color-based segmentation is to use clustering algorithms like K-Means. The idea is to group pixels with similar colors into clusters, where each cluster represents a different region.

The K-Means algorithm works as follows:

Convert the image into a feature vector: In color segmentation, each pixel’s color (in RGB or HSV) can be treated as a vector in 3D space. For an RGB image, the feature vector of a pixel is:

Choose the number of clusters, K: This defines how many segments you want in the image. For instance, if you expect three objects in the image, set .

Randomly initialize K cluster centroids: These are the starting points for the clusters in color space.

Assign each pixel to the nearest centroid: Using a distance metric (typically the Euclidean distance), assign each pixel to the closest centroid. The Euclidean distance for two pixels and is given by:

Recompute the centroids: For each cluster, calculate the mean of all the pixels assigned to that cluster, and update the centroid.

Repeat steps 4 and 5 until convergence: The algorithm continues iterating until the centroids no longer change significantly.

4. Example: Segmenting an Image of Fruits

Consider an image with three types of fruit: apples (red), bananas (yellow), and grapes (purple). We want to segment the image based on these colors.

Step 1: Convert the image into RGB space

Each pixel in the image is represented as an vector. For example, a pixel of an apple might be , a banana might be , and a grape might be .

Step 2: Apply K-Means Clustering

We choose , as we expect three main color regions. The algorithm clusters the pixels into three groups based on their RGB values.

Step 3: Segment the Image

After clustering, we label each pixel based on the cluster it belongs to. All pixels in the “apple” cluster will form the apple segment, all pixels in the “banana” cluster form the banana segment, and so on.

Step 4: Visualize the Segmentation

The result is a segmented image where the apples, bananas, and grapes are isolated into distinct regions based on their color.

5. Advanced Methods: Gaussian Mixture Models (GMM)

For more complex segmentation tasks, Gaussian Mixture Models (GMM) can be used. This approach models the color distribution of each segment as a Gaussian distribution. Each segment corresponds to a different Gaussian, and the image is segmented by determining the probability that a pixel belongs to each Gaussian distribution.

In the RGB color space, the probability that a pixel belongs to a segment is given by:

where:

- is the mean of the Gaussian (the average color of the segment).

- is the covariance matrix, representing the spread of the color distribution.

6. Evaluation Metrics

To evaluate the quality of the segmentation, we can use metrics like:

- Intersection over Union (IoU): This measures the overlap between the predicted segment and the ground truth.

- Accuracy: The percentage of correctly classified pixels.

- Dice Coefficient: A similarity measure between two sets of pixels, particularly useful for medical imaging segmentation.

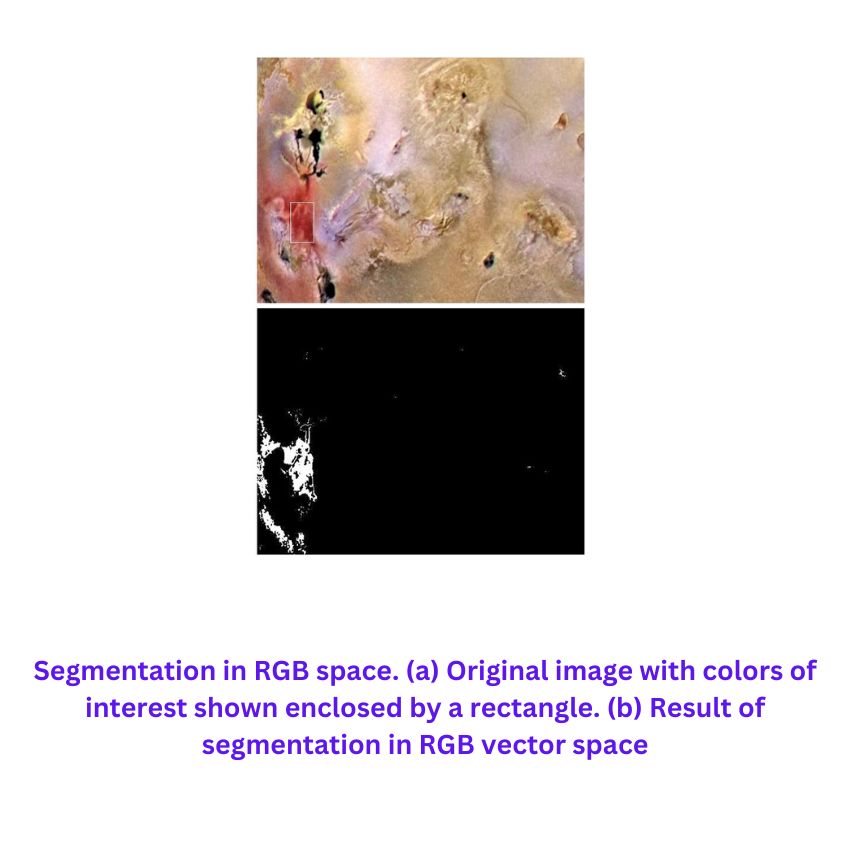

Segmentation in RGB Vector Space

The image you shared describes a process for image segmentation in RGB vector space using Euclidean distance and covariance-based measures to determine whether a pixel belongs to a specific color region. Below, I provide a more detailed breakdown of the concepts with full mathematical explanations based on the information shown in the image.

Segmentation in RGB Vector Space

Objective:

The goal of color-based segmentation is to classify pixels of an image based on their color values. The segmentation process aims to identify pixels whose color is similar to a pre-defined or average color that represents the region or object of interest.

Euclidean Distance in RGB Space:

In this method, each pixel in an image is represented as a point in a 3D RGB color space, where the axes correspond to the Red (R), Green (G), and Blue (B) components.

Let:

- represent the RGB values of a pixel in the image.

- represent the RGB values of the average color for the region we wish to segment.

To determine how similar the pixel color is to the average color , we calculate the Euclidean distance between them, denoted by , as:

This distance measures how far the pixel color is from the average color. If the distance is below a pre-defined threshold , the pixel is considered to belong to the target region. Otherwise, it is classified as background or part of another region.

Segmentation Criterion:

The locus of points such that the Euclidean distance forms a sphere of radius in the RGB color space. Points (pixels) inside this sphere are considered similar to the average color, while points outside are not.

The binary segmentation mask can be created by coding points inside the sphere as “foreground” (e.g., white) and points outside as “background” (e.g., black).

Generalized Distance Measure:

In more complex cases, we can generalize this distance formula to account for varying degrees of variance in different directions of the color space. This leads to the Mahalanobis distance, which takes into account the covariance matrix of the color samples.

The generalized distance formula is given by:

Where:

- is the covariance matrix of the RGB values of the sample color points (used to describe the distribution of the color data).

- is the inverse of the covariance matrix.

The locus of points where this distance is less than or equal to a threshold forms an ellipsoid in RGB space rather than a sphere. The shape of the ellipsoid reflects the variance of the color data in each direction (Red, Green, and Blue). Points inside the ellipsoid are considered similar to the average color.

Special Case: Identity Matrix

If the covariance matrix is the identity matrix (i.e., ), the generalized distance formula simplifies back to the standard Euclidean distance, as the matrix multiplication does not change the geometry.

Bounding Box Approximation:

To reduce computational complexity, instead of using ellipsoids or spheres, we can use a bounding box around the region of interest. The bounding box is an axis-aligned box in RGB space, where:

- The box is centered on the average color .

- The dimensions of the box are proportional to the standard deviation of the RGB values along each axis.

For example, for the red axis, the width of the box would be based on the standard deviation of the red values from the color samples. This approach avoids computing distances and simplifies the segmentation task.

Practical Implementation:

- For every pixel , we check if it lies within the bounding box, which is much simpler than checking if it is inside an ellipsoid or computing square roots as required by the Euclidean distance.

- This method provides a faster, but potentially less accurate, way to perform color-based segmentation, especially for real-time applications.

Color Edge Detection

The new image you’ve uploaded covers color edge detection and provides mathematical formulations to process the gradient of color images in the RGB color space. I’ll break down the key points and mathematical concepts for better understanding, based on the image you shared.

Color Edge Detection

Edge detection is critical for segmenting images based on the transitions between regions with different properties. In this section, the focus is on detecting edges specifically in color images by considering the gradients of the RGB channels.

Problems with Scalar Gradient:

Previously, gradient operators were introduced for grayscale images, where the gradient is defined for a scalar function. However, in color images, the RGB components represent a vector function. Applying gradient operators individually to each color channel and combining the results can lead to errors. Therefore, a new definition for the gradient is required when working with vector quantities like RGB color space.

Gradient in RGB Vector Space

Let the vectors represent unit vectors along the R (red), G (green), and B (blue) axes in the RGB color space. To compute the gradient, we define two new vectors that represent the partial derivatives of the image intensities:

- Vector : This represents the partial derivatives of R, G, and B with respect to :

- Vector : This represents the partial derivatives of R, G, and B with respect to :

These vectors capture how the color intensities change across the image in the – and -directions, respectively.

Computation of Second-Order Quantities

Next, we compute quantities that represent the changes in the vectors and , which correspond to the gradients of the color components. These are defined as dot products:

- : Represents the gradient of the image with respect to :

- : Represents the gradient of the image with respect to :

- : Represents the mixed derivative that combines changes in both and :

Direction of Maximum Rate of Change

The goal of edge detection is to identify areas where there is a sharp change in image intensity, which corresponds to the maximum rate of change in the image. The direction of the maximum rate of change at any point is given by:

This formula calculates the angle where the maximum change in image intensity occurs.

Magnitude of the Maximum Rate of Change

Once the direction is computed, the value of the maximum rate of change at a point , denoted as , is given by:

This equation combines the second-order derivatives to compute the magnitude of the change in image intensity, which is useful for detecting edges.

Interpretation and Application

This method extends the concept of the gradient from scalar functions (grayscale images) to vector functions (color images), allowing the detection of edges in color images. The technique described by Di Zenzo (1986) focuses on calculating the direction and magnitude of the gradient for color images, providing a more accurate representation of edges than traditional methods.

The last part of the explanation outlines that the derivation of the formula is quite involved and refers to further reading (the work by Di Zenzo) for a deeper understanding. The partial derivatives required for this computation can be obtained using well-known edge detection techniques, such as the Sobel operators.

References

- Gonzalez, R.C., & Woods, R.E. (2018). Digital Image Processing. Pearson.

- Di Zenzo, S. (1986). A note on the gradient of a multi-image. Computer Vision, Graphics, and Image Processing, 33(1), 116-125.

- Jain, A.K., & Dubes, R.C. (1988). Algorithms for Clustering Data. Prentice-Hall.