Prefix codes, also known as prefix-free codes, are a class of uniquely decodable codes where no codeword is a prefix of another. These codes are essential in data compression and communication systems because they enable instantaneous decoding without requiring lookahead or backtracking.

Definition and Properties

Prefix Codes

A prefix code is a set of codewords such that no codeword is a prefix of another. Formally, for a set of codewords over an alphabet , the prefix condition states:

where means is not a prefix of .

Example:

The code is a prefix code because no codeword is a prefix of another. In contrast, is not a prefix code because “0” is a prefix of “01”.

Properties of Prefix Codes

- Instantaneous Decoding: Since no codeword is a prefix of another, a prefix code can be decoded as soon as a codeword is encountered in the input stream.

- Uniquely Decodable: All prefix codes are uniquely decodable, but not all uniquely decodable codes are prefix codes.

- Kraft-McMillan Inequality: Prefix codes satisfy the Kraft-McMillan inequality: where is the length of the -th codeword and is the size of the alphabet.

Mathematical Foundation

Kraft-McMillan Inequality

Theorem:

For any prefix code over a -ary alphabet, the lengths of the codewords must satisfy:

Proof (Sketch):

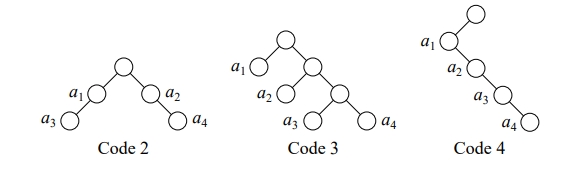

- Assign a binary tree structure to the codewords, where each node represents a decision.

- Each codeword corresponds to a unique path in the tree, and the lengths determine the depths of the nodes.

- The sum represents the fraction of the tree’s capacity used by each codeword.

- Since the tree cannot exceed its full capacity, the inequality holds.

If equality is achieved (), the code is maximally efficient.

Construction of Prefix Codes

Huffman Coding

Huffman coding is a popular method for constructing optimal prefix codes. It minimizes the average codeword length for a given set of symbol probabilities.

Algorithm:

- Begin with a list of symbols and their probabilities.

- Combine the two least probable symbols into a new node.

- Assign binary labels (e.g., 0 and 1) to the branches of the combined node.

- Repeat until a single tree remains.

Python Implementation

Example: Check Prefix Code

The following Python code checks if a given set of codewords forms a prefix code.

Example: Huffman Coding

The following Python code constructs a Huffman tree and generates prefix codes.

Applications of Prefix Codes

- Data Compression: Prefix codes are used in lossless compression algorithms like Huffman coding and Shannon-Fano coding.

- Networking: Efficient packet encoding in data transmission.

- Cryptography: Ensuring unique decoding in secure communications.

References

- Huffman, D. A. (1952). “A Method for the Construction of Minimum-Redundancy Codes.” Proceedings of the IRE.

- Cover, T. M., & Thomas, J. A. (1991). Elements of Information Theory. Wiley.

- Salomon, D. (2007). Data Compression: The Complete Reference. Springer.

- Kraft, L. G. (1949). “A Device for Quantizing, Grouping, and Coding Amplitude Modulated Pulses.”